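A user recently reported that after inserting more than 15 million records into OnceDB, the records inserted later could not be read. Microsoft's Windows port of Redis has the same problem. We wrote a test script that reproduces the bug: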

    var OnceDB = require('../oncedb')
    var oncedb = OnceDB()
    var assert = require("assert")
    var schema

    // Connect to database 0, then start the insert test
    var init = function() {
        oncedb.init({ select: 0 }, function(err) {
            if (err) {
                console.error(err)
                return
            }
            testHmset()
        })
    }

    // Insert hashes user:1 .. user:20000000 one at a time
    var testHmset = function(done) {
        console.log('Inserting 20 million records')
        var current = 0

        // Each hmset callback triggers the next insert
        var next = function(err) {
            if (err) {
                console.log(err)
                return
            }
            current++;
            if (current > 20000000) {
                console.log('done')
                return
            }
            // Log progress every 10,000 records
            if (current % 10000 === 0) {
                console.log(new Date(), current)
            }
            oncedb.client.hmset('user:' + current, {
                name: 'kris' + current
                , age: current
            }, next)
        }
        next()
    }

    init()

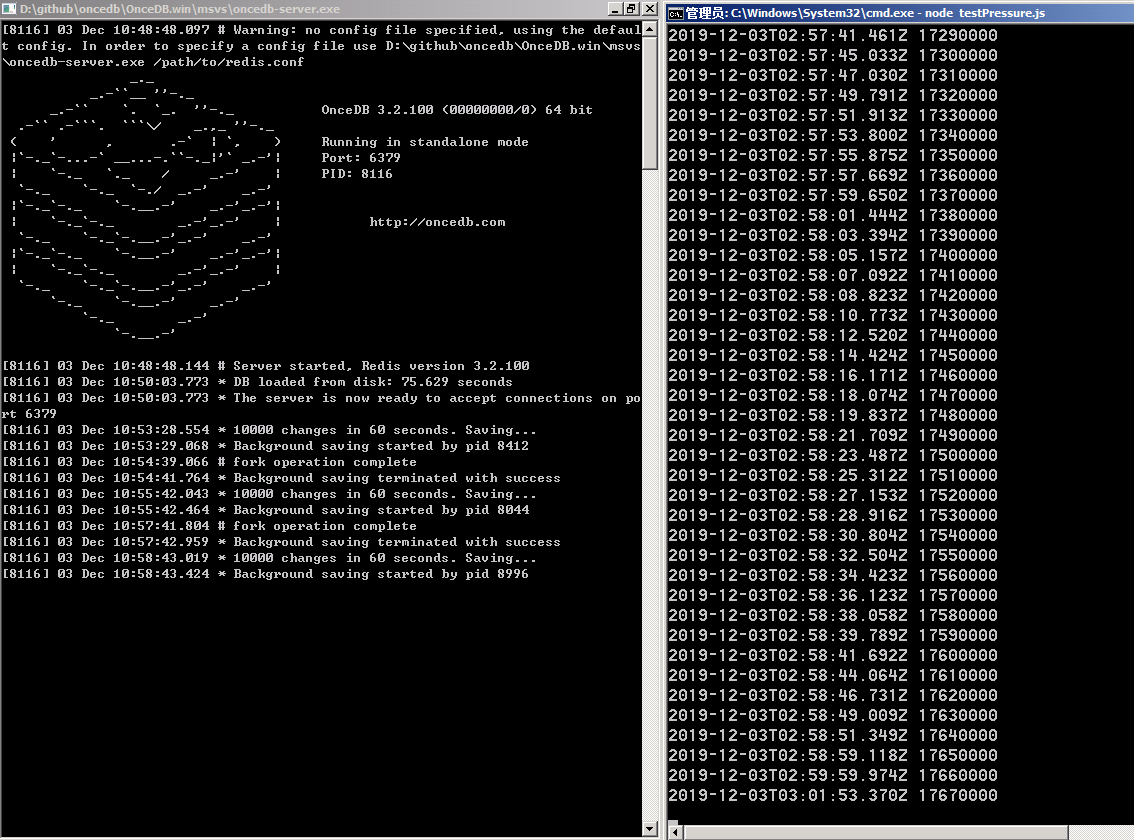
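To confirm the reported symptom (records inserted later cannot be read back), a spot check along the following lines could be run after the insert script. This is only a sketch: `spotCheck` is a hypothetical helper, and it assumes `oncedb.client` behaves like a node_redis-style client whose `hgetall(key, callback)` returns `null` for a key that does not exist.

```javascript
// Hypothetical helper: read back every `step`-th user hash and collect
// the ids that are missing or do not match what the insert script wrote.
// Assumes `client` exposes a node_redis-style hgetall(key, callback).
function spotCheck(client, total, step, done) {
    var missing = []
    var current = 0

    var next = function() {
        current += step
        if (current > total) {
            return done(null, missing)
        }
        client.hgetall('user:' + current, function(err, user) {
            if (err) {
                return done(err)
            }
            // A non-existent hash is assumed to come back as null
            if (!user || user.name !== 'kris' + current) {
                missing.push(current)
            }
            next()
        })
    }

    next()
}
```

For example, `spotCheck(oncedb.client, 20000000, 100000, function(err, missing) { console.log(err || missing) })` would sample 200 keys across the whole range and print the ids that could not be read.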

At around 13.8 million records, the console reported that persisting the database had failed, with the following message:

    The Windows version of Redis reserves heap memory from the system paging file
    for sharing with the forked process used for persistence operations.
    At this time there is insufficient contiguous free space available in the
    system paging file. You may increase the size of the system paging file.
    Sometimes a reboot will defragment the system paging file sufficiently for
    this operation to complete successfully.

Our investigation found that Redis on Windows uses MapViewOfFileEx to map memory when saving to disk.

src\Win32_Interop\Win32_QFork.cpp

    BOOL QForkChildInit(HANDLE QForkControlMemoryMapHandle, DWORD ParentProcessID) {
        ....
        vector<SmartHandle> dupHeapHandle(g_pQForkControl->numMappedBlocks);
        vector<SmartFileView<byte>> sfvHeap(g_pQForkControl->numMappedBlocks);
        for (int i = 0; i < g_pQForkControl->numMappedBlocks; i++) {
            if (sfvParentQForkControl->heapBlockList[i].state == BlockState::bsMAPPED_IN_USE) {
                dupHeapHandle[i].Assign(shParent, sfvParentQForkControl->heapBlockList[i].heapMap);
                g_pQForkControl->heapBlockList[i].heapMap = dupHeapHandle[i];
                sfvHeap[i].Assign(g_pQForkControl->heapBlockList[i].heapMap,
                    FILE_MAP_COPY,
                    0,
                    0,
                    cAllocationGranularity,
                    (byte*) g_pQForkControl->heapStart + i * cAllocationGranularity,
                    string("QForkChildInit: could not map heap in forked process"));
            } else {
                g_pQForkControl->heapBlockList[i].heapMap = NULL;
                g_pQForkControl->heapBlockList[i].state = BlockState::bsINVALID;
            }
        }

src\Win32_Interop\Win32_SmartHandle.h

    T* Assign(HANDLE fileMapHandle, DWORD desiredAccess, DWORD fileOffsetHigh, DWORD fileOffsetLow, SIZE_T bytesToMap, LPVOID baseAddress, string errorToReport)
    {
        UnmapViewOfFile();
        m_viewPtr = (T*) MapViewOfFileEx(fileMapHandle, desiredAccess, fileOffsetHigh, fileOffsetLow, bytesToMap, baseAddress);
        if (Invalid()) {
            if (IsDebuggerPresent()) DebugBreak();
            throw std::system_error(GetLastError(), system_category(), errorToReport.c_str());
        }
        return m_viewPtr;
    }

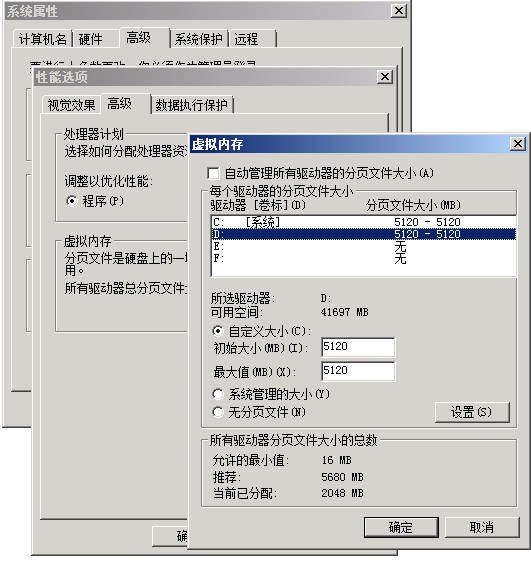

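This approach requires enough contiguous space in the system paging file. If the disk is heavily fragmented or the paging file is too small, the save may fail.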
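The difference between "enough free space" and "enough contiguous free space" can be illustrated with a toy model. The sketch below is not how Windows manages the paging file; it simply treats the file as an array of blocks (an assumption made for illustration, with hypothetical function names) and shows that the same amount of free space may or may not satisfy a request depending on fragmentation.

```javascript
// Toy model: the paging file as an array of blocks,
// true = free, false = in use.
function largestFreeRun(blocks) {
    var best = 0
    var run = 0
    for (var i = 0; i < blocks.length; i++) {
        // extend the current run of free blocks, or reset it
        run = blocks[i] ? run + 1 : 0
        if (run > best) best = run
    }
    return best
}

// A reservation succeeds only if one run of free blocks is long enough
function canReserve(blocks, needed) {
    return largestFreeRun(blocks) >= needed
}

// 6 free blocks in total, but split into runs of 2
var fragmented = [true, true, false, true, true, false, true, true]
// the same 6 free blocks laid out as a single run
var compacted = [false, false, true, true, true, true, true, true]

console.log(canReserve(fragmented, 4)) // false
console.log(canReserve(compacted, 4))  // true
```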

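Solution

1. Increase the system virtual memory (paging file) size

2. Reboot, then defragment the disk

3. Restart OnceDB or Redis and rerun the test script; with more than 17 million records, the save succeeded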
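After applying the steps above, one way to check whether the last background save succeeded, rather than relying on console output, is to read the `rdb_last_bgsave_status` field from the Redis `INFO` output. The sketch below only parses the text; `parseInfo` and `lastSaveSucceeded` are hypothetical helpers, and it assumes the client returns the raw `INFO` reply as a string.

```javascript
// Parse the text returned by the Redis INFO command into a plain object.
function parseInfo(text) {
    var info = {}
    text.split(/\r?\n/).forEach(function(line) {
        // skip blank lines and section headers such as "# Persistence"
        if (!line || line.charAt(0) === '#') return
        var idx = line.indexOf(':')
        if (idx > 0) {
            info[line.slice(0, idx)] = line.slice(idx + 1)
        }
    })
    return info
}

// True when the most recent background save is reported as successful
function lastSaveSucceeded(infoText) {
    return parseInfo(infoText).rdb_last_bgsave_status === 'ok'
}
```

Assuming `oncedb.client` is a node_redis-style client, this could be used as `oncedb.client.info(function(err, text) { console.log(err || lastSaveSucceeded(text)) })`.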